Responsible AI in Private Equity

Author: Michelle Seng Ah Lee, Deployment Principal at WovenLight

Private equity is at a transformative juncture as artificial intelligence (AI) becomes increasingly integral to both in-house operations and portfolio company strategies. In-house use cases of AI among PE firms include streamlining due diligence, enhancing operational efficiencies, driving consistency in regulatory compliance, and informing deal teams’ decision-making. In PE-owned portfolio companies, AI is often central to value creation plans, albeit with varying levels of sophistication, as multiple industries face disruption through AI. In particular, with the launch of third-party Generative AI systems and Large Language Models (LLMs), the barrier to leveraging cutting-edge AI is getting lower.

In pre-deal phases, target companies often tout AI-driven value and efficiencies with ambitious future AI development pipelines. However, understanding and evaluating the risk profile of these AI claims can be complex, particularly when a target company lacks mature AI governance practices or full visibility into its AI’s impact. Under pressure to adopt AI rapidly, portfolio companies may rush into implementation with little understanding of the potential risks. PE firms have a unique opportunity to help their portfolio companies by sharing clear guidelines, best practices, and recommended guardrails.

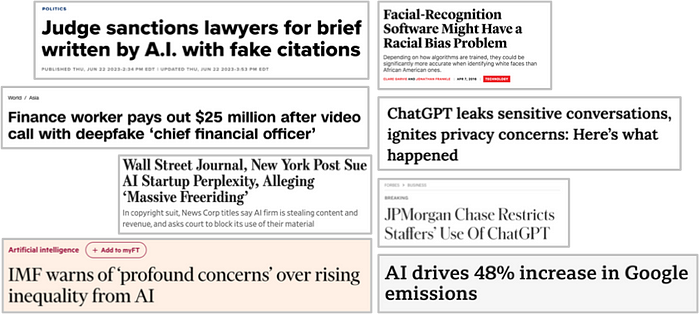

AI failures are now headline news and a board-level topic (see Image 1). With increasing regulatory and public scrutiny of the potential risks and harms of AI, GPs and LPs are increasingly concerned about whether sufficient governance is in place to mitigate them. Firms are pressed not only to leverage AI effectively but also to mitigate potential risks such as operational risk from errors, regulatory noncompliance, privacy and cybersecurity vulnerabilities, and reputational damage that could erode investment value and investors’ trust in the organisation. For senior leaders in private equity, the call for a more structured, responsible approach to AI is urgent as AI adoption scales both within firms and across their portfolio companies.

What is Responsible AI? Principles are not enough

We define Responsible AI as the development, deployment, and use of AI systems in ways that have a positive impact on society, minimize harm, and mitigate potential risks.

Here is what Responsible AI is not.

- It is not just an ESG risk. For some in private equity, the accountability for Responsible AI sits within their existing ESG function. While this may be a natural starting point for a conversation, AI has significant strategic, operational, and legal risks that need to be managed across functions.

- It is not only for AI technology companies. It is a cross-industry, board-level topic. Outsourcing the AI technology capability to a third-party technology provider does not mean that the liability and risks are outsourced as well. In particular, for in-house use of AI by private equity firms, the FCA’s Senior Managers and Certification Regime (SM&CR) clearly allocates responsibility for and ownership of key decisions and their impact to senior private equity leaders.

- It is not science fiction. Contrary to news articles proclaiming potential existential risk of AI, the risks we discuss in this article are current and well-understood.

- It is not about ideas; it is about practice. Responsible AI should not be limited to superficial commitments to high-level principles. To avoid “ethics-washing,” Responsible AI principles should be meaningfully embedded into daily practices, accountability mechanisms, and governance frameworks. This is what many firms find the most challenging and will be discussed in the next section.

What are the risks of AI? Integrating AI risks into the enterprise risk framework

Responsible AI starts with turning high-level principles, such as fairness and transparency, into practical steps for the organization to mitigate its risks. While there are hundreds of Responsible AI frameworks and standards, there is general consensus around five key principles: beneficence, non-maleficence, justice, explainability, and autonomy. These principles include commitments to have a positive impact, avoid potential harm (from poor design as well as unintended errors and breaches), test for discriminatory biases, comply with relevant laws and regulations, preserve the privacy and confidentiality of underlying data, provide transparent explanations of AI-driven decisions, and establish accountable roles and responsibilities for AI.

While the Responsible AI principles are well understood, many organizations struggle to operationalize them in practice. How do we take a vague and subjective principle such as fairness and translate it into guardrails, testing mechanisms, and robust processes? This is where traditional governance principles are helpful in framing the conversation.

The risks of AI may appear different to traditional technology and software risks. In addition, Generative AI has certain nuances in its risks that distinguish it from other types of AI, requiring different types of guardrails and testing mechanisms. However, AI does not introduce an entirely new set of risks requiring separate governance; rather, it is important to consider AI risks within the context of existing enterprise risk frameworks. Table 1 contains examples of AI risks, mapped to each enterprise risk category.

Given these risks, what does governance entail? While technology vendors for AI-as-a-service may focus on the technical mitigations, such as security and guardrails, it is important to take a holistic approach encompassing people, process, and technology (Image 2).

In addition to putting in place thoughtful AI architecture, effective guardrails, testing mechanisms, and monitoring systems, organizations also need the right processes in place, e.g. for use case risk assessment and approval. Employee training and awareness on the limitations of AI systems and establishing clear roles and responsibilities are equally important. In this sense, Responsible AI is not a technology problem, and it cannot be solved overnight. Responsible AI is an important transition process for an organization, rewiring the firm in its processes, talent, culture, and strategy.

What is in a Responsible AI Policy? A Guide for PE Firms’ In-House Use

The first step to AI governance is having a clear Responsible AI policy in place. A Responsible AI Policy should provide a framework for ensuring that AI technologies are deployed and used in a way that aligns with the firm’s values, legal obligations, and strategy. Any accompanying set of guidelines for the firm’s portfolio companies should be applicable across industries, with an emphasis on best practices. Here are some suggestions for inclusion:

- Purpose and scope: This section would outline the purpose of the policy and its link to other policies (e.g. data protection, privacy, etc.) and to organizational strategy.

In defining scope, there should be a clear distinction between the following types of AI:

(a) Open access AI: Publicly available AI platforms such as ChatGPT, Claude, Gemini, Midjourney, and DALL-E.

(b) Corporate licensed AI: AI platforms with specific licenses for corporate use, offering enhanced security and customization, such as Microsoft Copilot and GitHub Copilot.

(c) Approved AI Vendors: Third-party providers with AI as either their central offering (e.g. Microsoft Copilot) or an add-on (e.g. a chatbot in a third-party application) that have been vetted and approved by the company’s IT and Security teams.

(d) Commercial AI developer tools: Third-party providers of Generative AI services that have been vetted and approved by the company’s IT and Security teams.

(e) Open source AI: AI tools available under an open source permissive license, such as Llama-3 or Falcon-40B.

Different types of AI may warrant different restrictions, especially on the data permitted in each platform. For example, corporate licensed AI may permit the uploading of internal, non-confidential documents, but the policy may prohibit sharing any internal documents with open access platforms.

- Responsible AI principle definitions: This section would communicate the principles and promote greater understanding of the potential risks of AI.

- Prohibited uses: An organization may prohibit particular uses of AI and/or Generative AI, especially those with high legal and regulatory risk. This may include prohibited and/or high-risk use cases as defined by the EU AI Act, including in employment. Publishing AI-generated content that may infringe intellectual property rights, including copyright, may also be prohibited.

- Risk assessment: There should be a rapid assessment process to understand the risk level of a proposed use case, followed by a proportionate governance process. For example, well-understood low-risk use cases, such as the use of Microsoft Copilot for note taking, may be on a list of permitted use cases.

- Data handling and security: There should be clear guidance on the types of data permitted in each type of platform in alignment with the organizational data categories and sensitivity labels. The policy may also recommend that data should be anonymized where possible and that conversation history and cache should be cleared regularly.

- Intellectual property: The policy may specify that any output generated using company resources is the property of the firm.

- Transparency and disclosure: The policy may require employees to be transparent about their use of AI for work purposes, with explicit permission gained and documented if being used on confidential data.

- Training and awareness: AI fluency across all levels of the organization is critical. Ongoing training may be conducted for all employees, from senior leadership to risk teams to AI developers.

- Reporting and compliance: The firm may reserve the right to monitor the use of AI and Generative AI platforms for compliance. The policy should establish clear procedures and contact persons for reporting AI-related incidents (e.g. suspected data breaches and policy violations). Any consequences for non-compliance may be outlined.

- Policy review and update date: The policy should state how frequently it will be updated to reflect changes in technology, business needs, and regulatory requirements, and should specify the date of the last update.
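The platform-specific data restrictions described in this list can be expressed as a simple lookup. The following is a minimal sketch rather than a recommended implementation: the platform type keys, the three sensitivity labels, and every ceiling other than the two examples given above (corporate licensed AI accepting internal, non-confidential documents; open access platforms accepting no internal documents) are hypothetical placeholders that a firm would replace with its own data categories.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity labels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Highest label permitted per platform type. Only the first two entries
# reflect examples from the text; the rest are illustrative assumptions.
MAX_SENSITIVITY = {
    "open_access": Sensitivity.PUBLIC,
    "corporate_licensed": Sensitivity.INTERNAL,
    "approved_vendor": Sensitivity.INTERNAL,
    "commercial_developer_tool": Sensitivity.INTERNAL,
    "open_source": Sensitivity.INTERNAL,
}


def is_upload_permitted(platform_type: str, label: Sensitivity) -> bool:
    """Return True if data with this label may be used on this platform type."""
    ceiling = MAX_SENSITIVITY.get(platform_type)
    if ceiling is None:
        # Platform types not covered by the policy are denied by default.
        return False
    return label <= ceiling
```

Denying unknown platform types by default is a design choice in this sketch: a tool that has not yet been classified under the policy is treated as not approved until someone adds it.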

A comprehensive Responsible AI policy at a private equity firm provides a structured approach to managing the ethical, social, and legal challenges associated with AI adoption, while also ensuring that AI is used to create long-term value. The policy should emphasize the importance of transparency, fairness, accountability, and sustainability, aligning AI investments with the firm’s broader mission and values, and fostering trust among stakeholders.

What is in Responsible AI Guidelines for portfolio companies? A Best Practice Approach

It is more challenging to define a policy for portfolio companies that may span a variety of industries, jurisdictions, and regulated areas. In addition, many PE firms take a “pull” rather than “push” approach with their portfolio companies, intending to guide the management teams without mandating and directing them. This requires a different approach to Responsible AI.

Guidelines for portfolio companies may include some elements of the above Responsible AI policy structure. However, it is more important to provide relevant industry examples of best practices and actionable insights. This document may more closely resemble a “library” of techniques and case studies than a policy. A PE firm may also provide examples of prohibited use cases to help prevent portfolio companies from inadvertently venturing into territories that carry high levels of compliance and reputational risk.

To bring this to life, consider the use case of driving operational efficiency for a customer service call centre, which has applicability across a wide range of consumer and business service companies. Non-AI technologies may include workforce management tools, automatic call distribution (ACD), and VoIP (Voice Over Internet Protocol) systems.

With AI, it may be possible to monitor phone conversations between customers and agents to provide real-time personalized insights from historical customer data, churn risk probability indicators, and scoring of the agent’s performance. However, given that the EU AI Act specifically calls out workforce monitoring and performance evaluation as high-risk AI use cases, the PE firm may warn portfolio companies that using AI to score agents would require them to follow substantial obligations under the EU AI Act.

A Generative AI chatbot may provide summaries of the customer’s previous interactions with the company and recommend next best actions. A generalized questionnaire could be provided for portfolio companies with customers in Europe to rapidly assess whether their intended use cases would fall under prohibited or high-risk use cases.

Some guidelines on best practices and privacy risks of using customer data could also be shared. For example, for financial services companies in the UK, strict access controls and restrictions on usage and sharing may be required for information on customer vulnerability. For the chatbot, guardrails such as confidence score calculations and explainability mechanisms may support the agent’s decision-making.

Key insights on Responsible AI

In closing, we share some of our key insights from our experience in Responsible AI. Our hope is that this will support organizations that are considering how to integrate Responsible AI into their strategies and practices.

A multidisciplinary and cross-functional approach is important for Responsible AI. Effective AI policy creation should bring together legal, technical, and operational perspectives. Relying solely on one team often leads to gaps: legal teams may over-emphasize risk aversion, tech teams may lack business context, and ESG teams may not yet have deep AI expertise.

AI governance should be proportionate to its risks. There is a strategic risk that organizations will tend to overengineer governance processes and hinder innovation. Building high-impact AI applications will inherently come with higher risks, necessitating robust risk management frameworks. However, for low-risk use cases, proportionately accelerated processes can support experimentation while managing potential risks.
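One way to picture proportionate governance is a triage function that routes a proposed use case to a lighter or heavier review path. The sketch below is illustrative only: the three yes/no attributes, the route names, and the ordering of the checks are assumptions made for this example, not a prescribed assessment method or a legal test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """Hypothetical intake form for a proposed AI use case."""
    name: str
    on_permitted_list: bool        # already on the firm's list of permitted uses
    uses_confidential_data: bool   # touches data labelled confidential
    affects_individuals: bool      # e.g. employment or customer decisions


def governance_route(use_case: UseCase) -> str:
    """Pick a review path proportionate to the risk signals on the form."""
    if use_case.affects_individuals:
        return "full_review"       # highest-impact cases get the heaviest process
    if use_case.uses_confidential_data:
        return "standard_review"
    if use_case.on_permitted_list:
        return "pre_approved"      # well-understood, low-risk use
    return "rapid_assessment"      # new but low-risk: accelerated path


note_taking = UseCase("meeting notes", True, False, False)
agent_scoring = UseCase("call centre agent scoring", False, False, True)
```

Under these assumptions, `governance_route(note_taking)` returns `"pre_approved"` while `governance_route(agent_scoring)` returns `"full_review"`, so low-risk experimentation is not slowed by the process designed for high-impact applications.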

Third-party risk management is vital for AI. When using AI-as-a-service (AIaaS), typically the most important risks, such as ethical considerations and fairness, remain with the organization, whilst the less important technology risks such as availability and scalability are outsourced. The key differences between AIaaS and building your own AI are that 1) you may have no insight into the model itself, 2) you may have no insight into the datasets used to train the AI, and 3) you may have no insight into how your own organization’s data will be used within the AI and handled in general. Ensuring that your governance framework is capable of adequately addressing these limitations is an essential element of a comprehensive approach. Consulting third-party risk and technology risk teams will be increasingly vital. Their expertise helps assess AI tools for vendor risks and ensure that appropriate contractual obligations are in place, ranging from cybersecurity issues to the need for interpretability and accountability in AI outputs.

As AI adoption scales, governance needs to scale as well. Initially, PE firms may assess AI systems on an ad hoc and manual basis. As AI adoption scales, continuous monitoring becomes essential, ensuring that potential risks are identified and managed in real time across systems. We recommend putting in place automated monitoring systems for key metrics on input data and model robustness that automatically notify the relevant owners of any unusual changes outside expected boundaries.
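The automated monitoring recommended here can be reduced to a bounds check per metric with a named owner. The sketch below is a simplified illustration: the metric names, thresholds, and owner labels are invented for the example, and a real deployment would send the alerts through whatever notification channel the firm already uses rather than returning strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricBound:
    """Expected range for one monitored metric and who to notify."""
    metric: str
    lower: float
    upper: float
    owner: str


def find_breaches(observed: dict[str, float], bounds: list[MetricBound]) -> list[str]:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for bound in bounds:
        value = observed.get(bound.metric)
        if value is None:
            # A metric that stops reporting is itself an unusual change.
            alerts.append(f"{bound.owner}: {bound.metric} missing")
        elif not (bound.lower <= value <= bound.upper):
            alerts.append(
                f"{bound.owner}: {bound.metric}={value} "
                f"outside [{bound.lower}, {bound.upper}]"
            )
    return alerts


# Hypothetical bounds: one input data metric and one model robustness metric.
BOUNDS = [
    MetricBound("input_null_rate", 0.0, 0.05, "data-owner"),
    MetricBound("accuracy", 0.85, 1.0, "model-owner"),
]

alerts = find_breaches({"input_null_rate": 0.12, "accuracy": 0.91}, BOUNDS)
```

In this example only the input null rate falls outside its expected range, so `alerts` contains a single message addressed to the data owner.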

The AI landscape is constantly evolving. AI governance policies should be regularly updated to reflect evolving regulations, consumer expectations, and cross-industry standards. Multi-national PE firms often wish to define a single universal policy, but there is an inherent conflict between the desired clarity and the complexity of the real world’s jurisdictional differences in both definition and interpretation. Global and multi-industry PE firms will face heightened challenges compared to regional players, underscoring the importance of adaptable AI governance frameworks.

AI strategy should build trust and resilience internally to encourage adoption. Having appropriate controls and automated guardrails in place can build higher levels of confidence in the AI systems internally and lead to greater adoption. A culture of skepticism around AI outputs can be tackled through improved explainability and robustness of AI systems.

Responsible AI can be an accelerator for innovation. Effective AI risk management isn’t just about compliance; it is a powerful tool for accelerating AI-driven innovation across PE firms and their portfolios. A lack of understanding of AI and fear of its unknown risks can paralyze a firm and hinder adoption. AI has been and can be used to drive meaningful improvements in MOIC, IRR, and DPI in private equity. However, what separates organizations in their AI adoption is often not just capability but risk appetite. Organizations with established AI governance can scale and accelerate AI adoption, with leadership confident in AI outputs and a risk-proportionate process ensuring that each proposed AI system is aligned to organizational strategy and risk appetite.